Chicago-based freelance journalist Marco Buscaglia had a week from hell that began with some nasty emails he received on his cellphone early Monday.

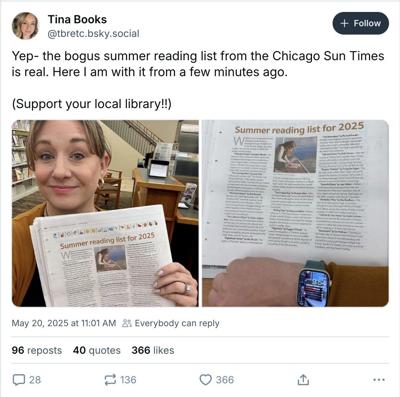

In a nutshell, the blowback he received from readers that morning at 6 a.m. pertained to an article he wrote earlier this year that was published last Sunday, about the top books to read this summer. That article contained major errors: 10 of the 15 books he listed don’t exist at all. Readers immediately spotted the problems.

It’s the kind of calamity that gives many of us in the media shivers.

As it turns out, Buscaglia relied on content generated by artificial intelligence (AI) to write his article. The AI tools he used, including one named Claude, produced bad information.

Buscaglia’s erroneous article was featured in a special “advertorial” section called the Heat Index guide to the best of summer, syndicated by a third party and picked up by two widely read U.S. newspapers, the Chicago Sun-Times and the Philadelphia Inquirer.

It was disastrous for Buscaglia, 56, a veteran in journalism for 33 years. He told me in a telephone interview this week from his base in Chicago that he takes full responsibility for what went wrong here, admitting that he didn’t do his due diligence by fact-checking the information he gleaned from AI.

“The fact that I completely dropped the ball on this, (not) checking up on it, makes me feel awful and incredibly embarrassed,” he said.

He later added: “I didn’t do the leg work to follow up and make sure all this stuff was legit.”

While he sat in bed reading those awful emails Monday and pieced together what had happened, he felt like a “cartoon character” blasted through the stomach by a cannon ball, walking around with a gigantic hole in his stomach for the rest of the day.

“I was devastated,” he said. “It’s been a couple of really bad days here, but I can’t say I don’t deserve it.”

Paramount in his thoughts was the notion that what he did fell well below the standards of the Inquirer and Sun-Times.

While AI can be a powerful tool that can assist journalists in some scenarios, as public editor I would state that this unfortunate case demonstrates that AI can also be quite fraught. AI can contain flaws and must be handled with caution by journalists.

When things go wrong, like the books fiasco, it can undermine the media’s credibility in a climate where public trust is already shaky.

The special Heat Index section was produced and licensed by a U.S. operation, King Features, which is owned by the large magazine outlet, Hearst. A spokesperson for the company that owns the Sun-Times said in a statement that the content was provided by the third party and not reviewed by the Sun-Times. The statement added that these oversight steps will be examined more carefully in future and that a new AI policy is being developed for the Sun-Times.

Buscaglia said he had used AI before writing his book summaries and was familiar with AI “from a layman’s” perspective: he assumed it was akin to a “glorified search engine.”

It was only after his mishap this week that he delved deeper into how this technology works. He told me that’s when he felt “incredibly naïve” and that he should have known more about AI while using it.

Generative artificial intelligence relies on large language models (LLMs) to create content, such as images, text and graphics. These LLMs are trained on massive amounts of digital data “scraped” from the internet.

Flaws with AI emerge when incidents sometimes called “hallucinations” occur. That’s where AI simply invents facts. This has even caused significant problems in court here in Canada where, in one example, a lawyer relied on legal cases that AI had invented.

Full disclosure: the Star uses AI for processes such as tracking traffic to our website. But we have a strict AI policy, both internal and in our publicly accessible Torstar Journalistic Standards Guide.

Among the rules stated: human verification of any AI-generated information or content is always required in our newsrooms. In addition, all original journalism must originate with, and be authored by, a human. AI “must not be used as a primary source for facts or information.”

Since stepping into her role last summer, Nicole MacIntyre, the Star’s editor-in-chief, has spoken publicly about her concerns around AI and its impact on journalism.

“I said then, and still believe, that we must harness the benefits of this technology cautiously, with public trust always at the forefront.

“Since then, I’ve immersed myself in the topic, watching closely as newsrooms around the world experiment with AI. I’ve seen the risks, including some very public missteps that have shaken reader confidence. But I’ve also seen what’s possible when this technology is used responsibly and with purpose,” MacIntyre told me.

She went on to say the Star’s AI guidelines protect our commitment to people-powered journalism.

“With the right guard rails, I’m excited about the possibilities,” she added.
